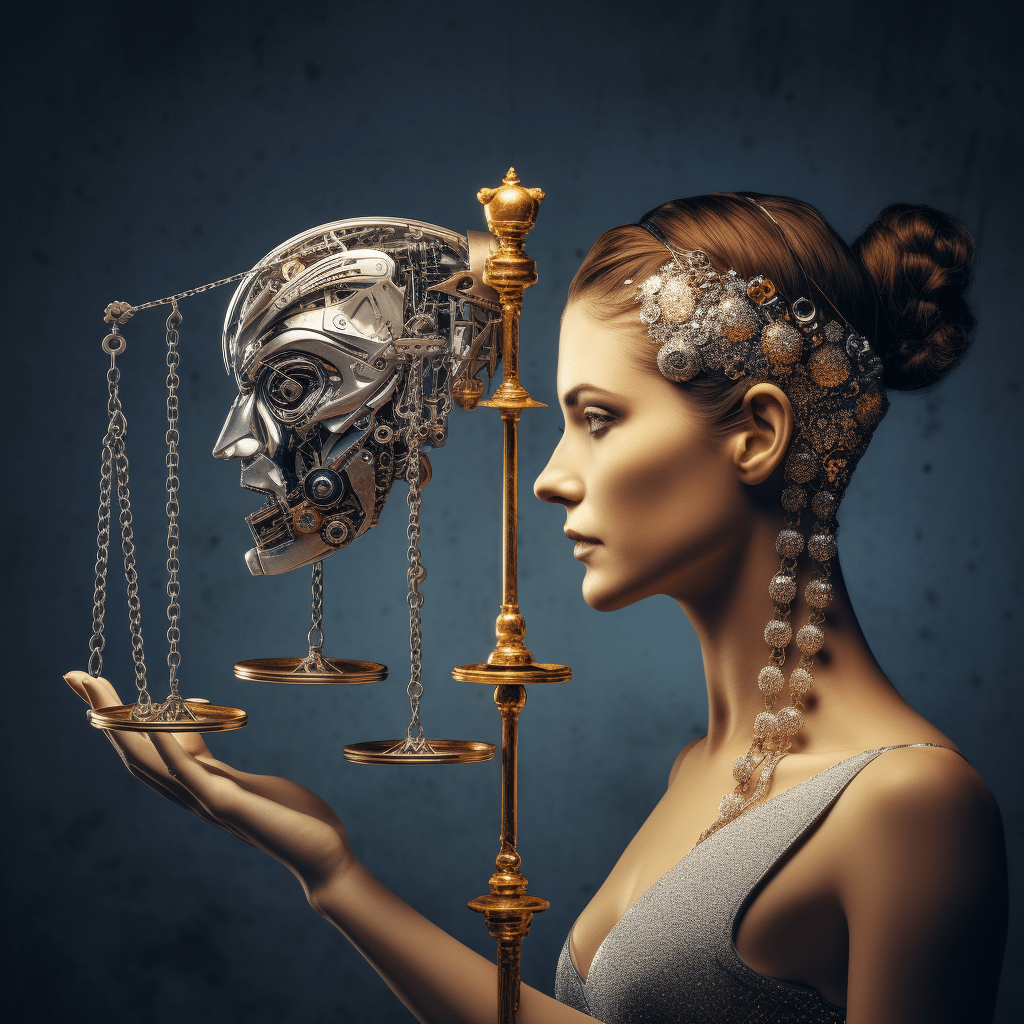

AI and Online Content Moderation: Striking a Balance

In today’s digital age, online platforms play a vital role in connecting people from around the world, and content moderation has become essential to keeping those spaces safe and inclusive. With the rapid growth of user-generated content, manually reviewing every post has become nearly impossible. This is where artificial intelligence (AI) comes into play.

AI-powered systems have transformed content moderation by reducing the human effort and time needed to review vast amounts of content. Machine learning models and natural language processing techniques allow AI to analyze and categorize content using predefined rules together with patterns learned from labeled examples. While AI moderation holds great promise, striking a balance between automated filtering and human involvement is crucial.

The Benefits

AI-driven content moderation systems offer several advantages. First, they improve efficiency by automatically filtering out harmful or inappropriate content, such as hate speech, harassment, or graphic violence. This significantly reduces response time: potentially harmful content can be removed shortly after it is posted, before it spreads further.

Second, AI moderation systems can help apply content policies consistently. Because the same model and rules are applied to every post, similar content tends to receive similar decisions, without the variation that individual moderators' personal beliefs or fatigue can introduce. Consistency is not the same as objectivity, however: as discussed below, AI systems can inherit biases from the data they are trained on.

The Challenges

However, AI content moderation systems face real challenges. Algorithms may struggle to grasp context-specific nuance, leading to false positives (harmless content removed) or false negatives (harmful content missed). Understanding sarcasm, cultural references, or the subtleties of different languages is still a challenge for AI.
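To make the trade-off between false positives and false negatives concrete, here is a minimal Python sketch, assuming a classifier that gives each post a harm score between 0 and 1 and flags it when the score reaches a threshold. The function `count_errors` and the scores and labels are hypothetical toy values for illustration, not real moderation data.

```python
def count_errors(scores, labels, threshold):
    """Count false positives and false negatives at a given threshold.

    scores: hypothetical model scores in [0, 1] (higher = more likely harmful)
    labels: True if a human reviewer judged the post harmful
    """
    false_pos = false_neg = 0
    for score, harmful in zip(scores, labels):
        flagged = score >= threshold
        if flagged and not harmful:
            false_pos += 1   # harmless post flagged (e.g. sarcasm misread)
        elif not flagged and harmful:
            false_neg += 1   # harmful post slipped through
    return false_pos, false_neg


# Toy data for illustration only.
scores = [0.95, 0.80, 0.60, 0.40, 0.20, 0.10]
labels = [True, False, True, True, False, False]

for t in (0.3, 0.5, 0.9):
    fp, fn = count_errors(scores, labels, t)
    print(f"threshold={t}: false positives={fp}, false negatives={fn}")
```

On this toy data, raising the threshold from 0.3 to 0.9 removes the false positive but lets two harmful posts through; no single threshold eliminates both kinds of error.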

Another concern is over-reliance on automated systems. While AI can handle a significant portion of content, human moderators are needed to make nuanced decisions where context is essential. AI tools and human moderators must work together to minimize errors in content filtering.

Striking a Balance

To achieve this balance, online platforms should combine AI and human moderation. By using AI systems to filter and flag potentially harmful content, platforms can maintain a safer environment while reducing the workload on human moderators.

However, human review should remain an integral part of the process. AI algorithms can provide initial filtering, but humans should assess questionable content and make the final decisions. Platforms can also feed user and moderator feedback back into the AI systems to retrain and improve them, so that they adapt to evolving user behavior and new challenges.
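One common way to combine AI filtering with human review is confidence-based routing. The sketch below assumes a classifier that outputs a harm score in [0, 1]; the thresholds, the `Post` type, and the `route` and `record_feedback` helpers are hypothetical names chosen for illustration. Very confident predictions are acted on automatically, uncertain ones go to a human moderator, and the moderator's verdict is stored for later retraining.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real platform would tune these per policy area.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


@dataclass
class Post:
    post_id: str
    harm_score: float  # assumed output of some classifier, in [0, 1]


def route(post: Post) -> str:
    """Decide what happens to a post based on the classifier's confidence."""
    if post.harm_score >= REMOVE_THRESHOLD:
        return "auto_remove"    # very confident: act immediately (still appealable)
    if post.harm_score >= REVIEW_THRESHOLD:
        return "human_review"   # uncertain: a moderator makes the final call
    return "publish"


def record_feedback(training_set: list, post: Post, human_label: bool) -> None:
    """Store a moderator's verdict so it can be used in later retraining."""
    training_set.append((post.post_id, post.harm_score, human_label))


queue = [Post("a", 0.98), Post("b", 0.70), Post("c", 0.10)]
for p in queue:
    print(p.post_id, route(p))

# Suppose a moderator reviews post "b" and decides it is not harmful.
training_set = []
record_feedback(training_set, queue[1], human_label=False)
```

Where the two thresholds sit determines how much work reaches human moderators: lowering `REVIEW_THRESHOLD` sends more posts to review, while raising `REMOVE_THRESHOLD` leaves fewer decisions to the model alone.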

Ultimately, the goal should be an ecosystem in which AI-powered moderation and human judgment coexist. Each has distinct strengths: AI offers speed and scale, while humans are better at evaluating context and understanding subjective nuance.

While AI-powered content moderation systems continue to evolve, finding the right balance of automated filtering and human expertise will remain essential in creating a safer, more inclusive online community.

What ethical considerations should be taken into account when implementing AI-based content moderation systems?

Several ethical considerations should be taken into account when implementing AI-based content moderation systems:

1. Transparency and Accountability:

It is essential to ensure transparency in how the AI system moderates content and the criteria used for decision making. Users should have a clear understanding of why certain content is being flagged or removed. Additionally, accountability measures should be in place to address any errors or biases in the system.

2. Bias and Discrimination:

AI systems can inherit biases from the data they are trained on and may inadvertently discriminate against certain groups or viewpoints. Developers should continuously monitor and address any bias in the algorithm and ensure fair treatment of all users, regardless of their demographic characteristics or opinions.

3. Freedom of Expression:

Content moderation systems should respect the principle of freedom of expression and avoid disproportionately censoring or suppressing legitimate speech. Balancing the need for moderation with the preservation of free speech rights is crucial.

4. User Privacy and Data Protection:

AI systems for content moderation often require access to user data for analysis. This data must be handled responsibly, protecting user privacy and complying with data protection regulations. AI systems should collect and retain only the data they need and keep it appropriately secured.

5. Human Oversight and Decision Making:

While AI systems can automate the content moderation process, there should be human involvement in the decision-making process. Human moderators should have the ability to review and override AI decisions, especially in cases that require contextual understanding or cultural sensitivity.

6. User Empowerment and Appeals:

Users should have the ability to appeal content moderation decisions and have a clear process through which they can do so. It is crucial to empower users and provide them with avenues to challenge unfair or incorrect moderation actions.

7. Continuous Evaluation and Improvement:

Content moderation systems should be under constant evaluation to assess their impact on users and their overall effectiveness. Regular audits, feedback mechanisms, and algorithmic transparency can help identify and address any ethical issues that may arise.
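Bias monitoring and regular audits can start with a simple measurement: how often harmless posts from different groups are wrongly flagged. The sketch below assumes an audit sample in which each post carries a group label, the AI's decision, and a human reviewer's verdict; the group names, the sample, and the 0.10 tolerance are hypothetical. Comparing false-positive rates is only one of several fairness checks an audit might use.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """records: iterable of (group, flagged_by_ai, harmful_per_human) tuples.

    Returns, for each group, the share of harmless posts that the AI flagged.
    """
    harmless = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, flagged, harmful in records:
        if not harmful:
            harmless[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / harmless[g] for g in harmless}


# Hypothetical audit sample: (dialect group, flagged by AI, harmful per human review)
sample = [
    ("dialect_a", False, False), ("dialect_a", True, False),
    ("dialect_a", False, False), ("dialect_a", False, False),
    ("dialect_b", True, False), ("dialect_b", True, False),
    ("dialect_b", False, False), ("dialect_b", True, True),
]

rates = false_positive_rate_by_group(sample)
print(rates)

gap = max(rates.values()) - min(rates.values())
if gap > 0.10:  # hypothetical tolerance chosen for illustration
    print(f"Audit warning: false-positive rates differ by {gap:.2f} across groups")
```

A large gap suggests that harmless speech from one group is being removed more often than another's, which is the kind of issue an audit should surface for human investigation.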

Overall, ethical considerations in AI-based content moderation systems should focus on fairness, transparency, accountability, protecting user rights, and striking a balance between moderation and freedom of expression.

How can artificial intelligence effectively strike a balance between moderating online content and respecting freedom of speech?

Artificial intelligence (AI) can play a significant role in moderating online content while respecting freedom of speech, provided platforms adopt the following strategies:

1. Clear guidelines and user controls:

AI systems should be trained and configured according to transparent rules and guidelines that clearly define what content is considered unacceptable. Users should also have controls for customizing their own content filtering preferences.

2. Continuous human oversight:

Human moderators should stay involved to review and verify AI actions, especially borderline or contested ones. Humans bring contextual understanding, empathy, and nuanced judgment, which allows them to interpret complex cases better.

3. Context-aware algorithms:

AI models should be trained to consider the context in which content is posted. This includes understanding the intent, cultural nuances, and historical context surrounding certain phrases or images to reduce false positives in content moderation.

4. Collaborative efforts with the user community:

Platforms can involve users in the moderation process by allowing them to flag inappropriate content or provide feedback on AI decisions. This helps in refining the AI algorithms and ensures a collective effort in content moderation.

5. Transparency and accountability:

AI algorithms and their moderation decisions should be transparent to both users and content creators. Users should have the ability to understand and question AI decisions, and platforms must be accountable for their AI systems’ actions.

6. Periodic algorithmic audits:

Platforms should regularly audit their AI moderation systems for fairness, accuracy, and bias. Audits can be carried out by independent third-party organizations or by dedicated internal teams that assess the system’s performance.

7. Continuous improvement through machine learning:

AI models can be improved continuously, for example by retraining them on newly labeled data such as moderator decisions and appeal outcomes. Regular updates and retraining help them adapt to evolving user behavior and deceptive tactics.

8. Considering diverse perspectives:

AI moderation systems should be trained on diverse datasets that represent a wide range of viewpoints and cultures. This helps in reducing bias against certain groups and ensures a fair representation of different perspectives.

9. Empowering users with counter-speech:

AI can proactively promote counter-speech and positive engagement to combat hate speech and misinformation. By highlighting constructive conversations and providing fact-checking resources, AI can encourage healthy dialogue and minimize the need for heavy-handed moderation.

10. Open research and collaboration:

The AI community should actively promote research and collaboration to develop shared best practices for responsible and fair content moderation. Open discussions, sharing of techniques, and collective efforts can lead to more balanced and ethically sound AI systems.
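Strategy 1 above calls for user controls over content filtering. Here is a minimal sketch of one way to do this, assuming a classifier that returns a score per category: platform-wide limits decide what is removed for everyone, while each user's own preferences only hide additional content from their feed. The category names, limits, and `UserPreferences` type are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical platform-wide limits; a classifier is assumed to return
# one score in [0, 1] per category.
PLATFORM_LIMITS = {"hate_speech": 0.9, "graphic_violence": 0.9}


@dataclass
class UserPreferences:
    # Per-category limits chosen by the user; lower means stricter filtering.
    hide_above: dict = field(default_factory=dict)


def visibility(scores: dict, prefs: UserPreferences) -> str:
    # Platform-wide rules apply to everyone and cannot be loosened by users.
    for category, limit in PLATFORM_LIMITS.items():
        if scores.get(category, 0.0) >= limit:
            return "removed"
    # Personal preferences only hide content from this user's own feed.
    for category, limit in prefs.hide_above.items():
        if scores.get(category, 0.0) >= limit:
            return "hidden_for_user"
    return "visible"


post_scores = {"hate_speech": 0.2, "graphic_violence": 0.6, "profanity": 0.7}
default_user = UserPreferences()
cautious_user = UserPreferences(hide_above={"graphic_violence": 0.5, "profanity": 0.5})

print(visibility(post_scores, default_user))
print(visibility(post_scores, cautious_user))
```

Keeping personal filters separate from platform-wide removal lets cautious users see less without suppressing that speech for everyone else, which is one way to reconcile filtering with freedom of expression.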

By combining these approaches, AI can contribute to effective content moderation while respecting freedom of speech, thereby benefiting online communities and fostering a more inclusive and open online environment.

What are the potential drawbacks and challenges of relying solely on AI for online content moderation?

Relying solely on AI for online content moderation carries several potential drawbacks and challenges:

1. Lack of Contextual Understanding:

AI may struggle to comprehend the context and intent behind messages, leading to misinterpretation and potentially censoring innocent content or missing harmful content.

2. Bias and Inaccuracy:

AI algorithms are trained on datasets created by humans, and if these datasets include biased or discriminatory content, AI may replicate and amplify those biases. This can inadvertently discriminate against certain groups or viewpoints.

3. Constantly Evolving Tactics:

As users find new ways to bypass AI-based moderation tools, the technology must be updated regularly to keep up, turning moderation into a persistent game of catch-up.

4. Lack of Emotional Intelligence:

AI may fail to understand the nuances of human emotions, humor, and sarcasm. It could mistakenly flag or remove content that is harmless or intended as a joke, causing frustration among users.

5. Over-reliance on Automated Solutions:

Depending solely on AI for content moderation may sideline the human moderators who critically evaluate and understand the nuances of user-generated content, potentially leading to a loss of human judgment and empathy.

6. Legally and Ethically Complex Content:

Some content moderation decisions involve legal and ethical gray areas that require interpretation and discretion. AI algorithms may struggle in making such nuanced judgments, potentially leading to inappropriate or inconsistent outcomes.

7. Lack of Accountability:

AI algorithms are created and maintained by humans, but the reasoning behind individual decisions can be opaque. When no one can explain why a piece of content was removed, it becomes unclear who is responsible for the decisions AI systems make.

8. Unintended Consequences:

Over-reliance on AI content moderation can lead to unintended consequences, such as the stifling of free speech, censorship of legitimate content, or the creation of echo chambers where diverse perspectives are suppressed.
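To illustrate the evolving tactics described in point 3, here is a minimal sketch of a normalization step that undoes a few common evasion tricks (character swaps like "b@dw0rd", spaced-out letters, and stylized Unicode) before matching against a blocklist. The blocklist term `badword` and the character map are hypothetical placeholders; production systems rely on learned models rather than word lists alone.

```python
import re
import unicodedata

# Hypothetical blocklist used only for illustration.
BLOCKED_TERMS = {"badword"}

# A few character swaps used to dodge filters (illustrative, not exhaustive).
LEET_MAP = str.maketrans({"4": "a", "@": "a", "3": "e", "1": "i",
                          "!": "i", "0": "o", "$": "s", "5": "s"})


def normalize(text: str) -> str:
    # Fold stylized or accented Unicode letters to plain ASCII where possible.
    text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode()
    text = text.lower().translate(LEET_MAP)
    # Drop everything except letters, undoing tricks like "b a d" or "b.a.d".
    return re.sub(r"[^a-z]", "", text)


def contains_blocked(text: str) -> bool:
    squashed = normalize(text)
    return any(term in squashed for term in BLOCKED_TERMS)


for text in ["b@dw0rd", "B A D W O R D", "ｂａｄｗｏｒｄ",
             "a perfectly fine post", "bad wordplay"]:
    print(repr(text), contains_blocked(text))
```

The last example shows the catch-up problem in miniature: stripping separators catches "B A D W O R D" but also flags the harmless phrase "bad wordplay", so each countermeasure against evasion can create new false positives.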

These challenges highlight the need for a balanced approach to content moderation that combines AI tools with human judgment and oversight, ensuring a more accurate, ethical, and contextually sensitive moderation process.
